How many applications do plant floor personnel need to learn to do their job? The title of my blog is Dickensian enough, so I won't open with a cheesy "best of times, worst of times" parody. This is the first in a series discussing the impact of technology, production processes, and the place where they intersect – the production workers on the factory floor. The story begins with two plants – Deuxieme Botte Manufacturing (DBM) and Premiere Chaussure Industries (PCI). We are introduced to two workers: Don is a maintenance technician at DBM, and Brad has a similar position at PCI. Let's take a look at a typical morning for each of them.

Morning at DBM

Don began his day just like every other; he stopped by his desk to see what work orders he needed to complete during his shift. He found three in his inbox – all preventive maintenance work. He picked the one he knew would take the longest, popped his head into the maintenance supervisor's office, and said, "'Mornin', Jeff – I'm headed off to Assembly Machine 3 to take care of the PM work."

"What work order number is that?" asked Jeff. "I need to make sure it's entered into the maintenance dispatch system."

Don knew the WO was already in the system, but read it off the paperwork for Jeff anyway.

"Yep, it's there!" Jeff said. "Let me know when you're done and I'll update the status in the system."

"Will do," said Don as he headed out the door. As he pushed his tool cart to the assembly lines, he began reading the details of the work order. "Hmmm, guess I'll need to stop by MRO to pick up the parts kit for this PM."

When he got to MRO, Don showed the attendant, Debbie, the work order and asked for the parts kit. "I'm going to have to look this up by kit number to make sure we give you the right one – you know, correct storage bin number and all," she said. As he waited, Don thought about what an improvement it was to get PM parts pre-kitted instead of requesting each part individually like they used to.

"Hey Deb – you going to be long?" ("Maybe there's time for coffee," he thought to himself.)

"Well, the system seems a bit slow today. This usually only takes a few minutes, but for some reason it's taking longer today! Finally –" she said with dramatic flair, "– here it is. Could you fill out the requisition form using kit #80054124?"

"Oh great – I don't have a charge number on this work order. Deb, do you happen to know the department charge for Assembly?"

"I think I have it on a list here somewhere…here it is," she said.

"Thanks Deb. I don't know why we can't just use the work order number instead of a department charge. Life would be so much easier." He completed the paperwork and returned it to Deb.

"We can ask IT to make that change, but you know how long that will take," Deb replied.

"Yeah, I love my job," muttered Don. He was being only partially sarcastic; he mostly enjoyed his work, but it seemed to him that more and more of what he did was to feed a system that really only provided benefit to the bean counters somewhere in the front office. Things that would make his life easier got "prioritized" and placed in a queue until IT could assign resources to make the necessary system changes.

Deb laughed and handed Don the parts kit, and he was off to the Assembly area. About 30 minutes into the job, he noticed Jeff approaching.

"Hey Don! I've been trying to get ahold of you! We've got a machine down in Parts and I need you to get over there right away! Why didn't you answer your cell phone? We gave them to you so we could reach you immediately!"

Don looked at the phone sitting on his tool cart. The PM job required reaching into some tight spaces, so he had taken the phone off his belt. Assembly was a noisy area, so he hadn't heard the phone ring. "I love my job," he thought to himself. "OK – give me two minutes and I'll be there." He looked for the nearest Maintenance Dispatch display board to see which machine was down, gathered up his tools, and was off.

Morning at PCI

Brad stopped by his locker and fit his Bluetooth-enabled personal protective equipment – which interacted with his smart device – over his eyes and ears. He used to have a desk, but since he hadn't really used it in over a year, he had given it away. "Good morning, Smarty," he said to his personal digital assistant. He named the device "Smarty" because he didn't want to play favorites among the competing technologies, and it really was a smart device. It interfaced directly with the enterprise systems and actually helped him do his work. "What's on my 'to-do' list for the day?" he asked.

The device responded, "Good morning, Brad. The first job on your list is a preventive maintenance task on Assembly Machine #4. The parts kit was delivered to the department and is waiting by the machine, and I have the procedure list ready when you need it. Would you like to hear about the other tasks on your list?"

"Later, Smarty. I'm off to work on the assembly machine." Brad grabbed his tools, headed directly to Assembly Machine #4, and began working.

After some time, Smarty interrupted Brad. "Excuse me, Brad, but your assistance is needed. An operator has reported a parts machine down. The upstream buffer for this machine has space available, but the downstream buffer will be empty in 15 minutes. That will shut down other processes needed to meet schedule, which elevates this job's priority over the PM task you are currently working on."

"OK Smarty – which machine is it?" asked Brad.

A plant map appeared in Brad's protective eyewear; the breakdown glowed red on the map, along with a green route from Brad's current location to the machine requiring his attention. Brad first made sure his lockout/tagout (LOTO) was secure on the assembly machine and then headed for Parts, where he was greeted by Betty, the machine operator. "Hi Brad. Wow – that was fast!" she said. "I just reported the breakdown five minutes ago!"

"Yeah, Smarty here told me it was kinda urgent," said Brad, pointing to his smart device.

Two Worlds

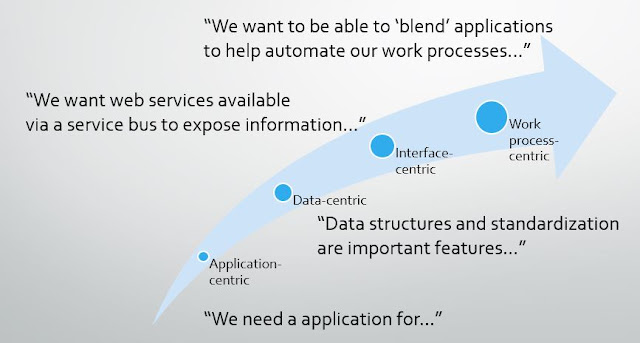

There are many comparisons to draw between Don's world at DBM and Brad's at PCI. Both have similar systems in place, but in Brad's world the artifacts of the technology – things such as work orders and department charges – are invisible at the worker level. Even the systems themselves are not apparent to the technician. Brad has one interface – his smart device – which ties all the enterprise systems (such as ERP, CMMS, MES/MOM, and PLM) together. They collaborate to help him do his job.

In contrast, Don's world is so cluttered with these artifacts that he cannot imagine a world without them. He has log-ins for all of the systems, and knows a few transactions in each. He even keeps instructional "cheat sheets" in his tool cart for reference when he accesses systems on the plant floor. He may have to search for an available PC on the floor to interact with the enterprise systems, which is a "hit or miss" proposition; an operator may have the PC tied up doing quality checks, or a supervisor may be using it for a downtime study during his gemba walk. Feeding the technology has been bolted onto his work processes.
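For readers who think in code, here is a rough sketch of the "one interface" idea from Brad's world. It is only an illustration under my own assumptions: the `Cmms` and `Erp` classes are hypothetical in-memory stand-ins, not real product APIs, and the work order, kit, and charge numbers are made up. The point it tries to show is that the assistant looks up the charge number and updates the work order status itself, so the technician only ever hears about the machine and the job.

```python
from dataclasses import dataclass


@dataclass
class WorkOrder:
    number: str        # hypothetical work order ID
    machine: str
    kit_number: str    # hypothetical parts kit ID
    department: str


class Cmms:
    """Hypothetical maintenance-system stub: work orders and their status."""

    def __init__(self, work_orders):
        self._orders = {wo.number: wo for wo in work_orders}
        self.status = {wo.number: "open" for wo in work_orders}

    def next_open(self):
        # Return the first work order still marked "open", or None.
        for number, state in self.status.items():
            if state == "open":
                return self._orders[number]
        return None

    def set_status(self, number, state):
        self.status[number] = state


class Erp:
    """Hypothetical ERP stub: department charge numbers and kit issues."""

    def __init__(self, charge_numbers):
        self._charges = charge_numbers
        self.issued = []

    def issue_kit(self, kit_number, department):
        # The charge number is resolved here, not by the technician.
        charge = self._charges[department]
        self.issued.append((kit_number, charge))
        return charge


class Assistant:
    """Single interface in front of the enterprise systems."""

    def __init__(self, cmms, erp):
        self._cmms = cmms
        self._erp = erp

    def start_next_task(self):
        wo = self._cmms.next_open()
        if wo is None:
            return "Nothing else on your list."
        self._erp.issue_kit(wo.kit_number, wo.department)
        self._cmms.set_status(wo.number, "in progress")
        # Work order and charge numbers never reach the technician.
        return (f"Your next job is preventive maintenance on {wo.machine}. "
                "The parts kit is waiting by the machine.")


# Made-up example data.
cmms = Cmms([WorkOrder("WO-1001", "Assembly Machine #4", "KIT-01", "Assembly")])
erp = Erp({"Assembly": "CH-ASSY"})
smarty = Assistant(cmms, erp)
message = smarty.start_next_task()
print(message)
```

In Don's world, each step inside `start_next_task` is a separate conversation, form, or log-in. Whether a real deployment would look anything like this depends on the systems involved.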

Which factory is more competitive? Which factory is seeing greater technology ROI? The world of the PCI factory seems like science fiction, but every technology that enables it currently exists and is readily available. Many can identify with (and many more may even be envious of) the world of DBM, but cannot see the path to the PCI world. What strategies are in play? Who owns them? Who drives them? How did management become convinced to fund this approach? What additional technologies are needed, or how can existing technologies be re-deployed? These questions will be explored in future postings in this series.